State Spotlight: New York and California

In a recent X post, OpenAI’s CEO Sam Altman teased plans to release a new version of ChatGPT that can “respond in a very human-like way,” “act like a friend,” and, for verified adults, “allow even more, like erotica.” Earlier this year, Elon Musk’s xAI released a companion feature for its Grok chatbot with two 3D animated characters: Ani (an anime-style female character) and Rudy (a red panda who can operate in a vulgar version known as “Bad Rudy”). Other platforms, such as Meta AI, also offer companion chatbots designed to act as assistants, friends, or even romantic partners. As interest in AI companions has grown, so too has concern over potential risks, particularly for children, and lawmakers have pushed to keep pace with this rapidly evolving field.

In this edition of our State Spotlight series, we examine how New York and California are addressing the emergence of AI companions, and we offer risk mitigation insights for businesses impacted by new regulations.

Leading AI Companion Regulation Efforts: New York & California

New York and California have both enacted laws regulating AI companions: New York’s A3008C, effective November 5, 2025, and California’s SB 243, effective January 1, 2026. In general, these laws regulate AI companions capable of sustaining human-like relationships across multiple interactions. More specifically, California’s law applies to companions “capable of meeting a user’s social needs…by exhibiting anthropomorphic features” but does not define these terms. New York’s law applies to companions capable of “retaining information on prior interactions or user sessions [and] asking unprompted or unsolicited emotion-based questions that go beyond a direct response to a user prompt.” New York’s law likewise leaves its key terms undefined, including “prior interactions or user sessions,” which could be interpreted in several ways.

Both California and New York explicitly exempt categories of AI bots related to customer service, business purposes, productivity, research, and technical assistance. California also exempts video game bots and stand-alone consumer devices acting as voice-activated virtual assistants.

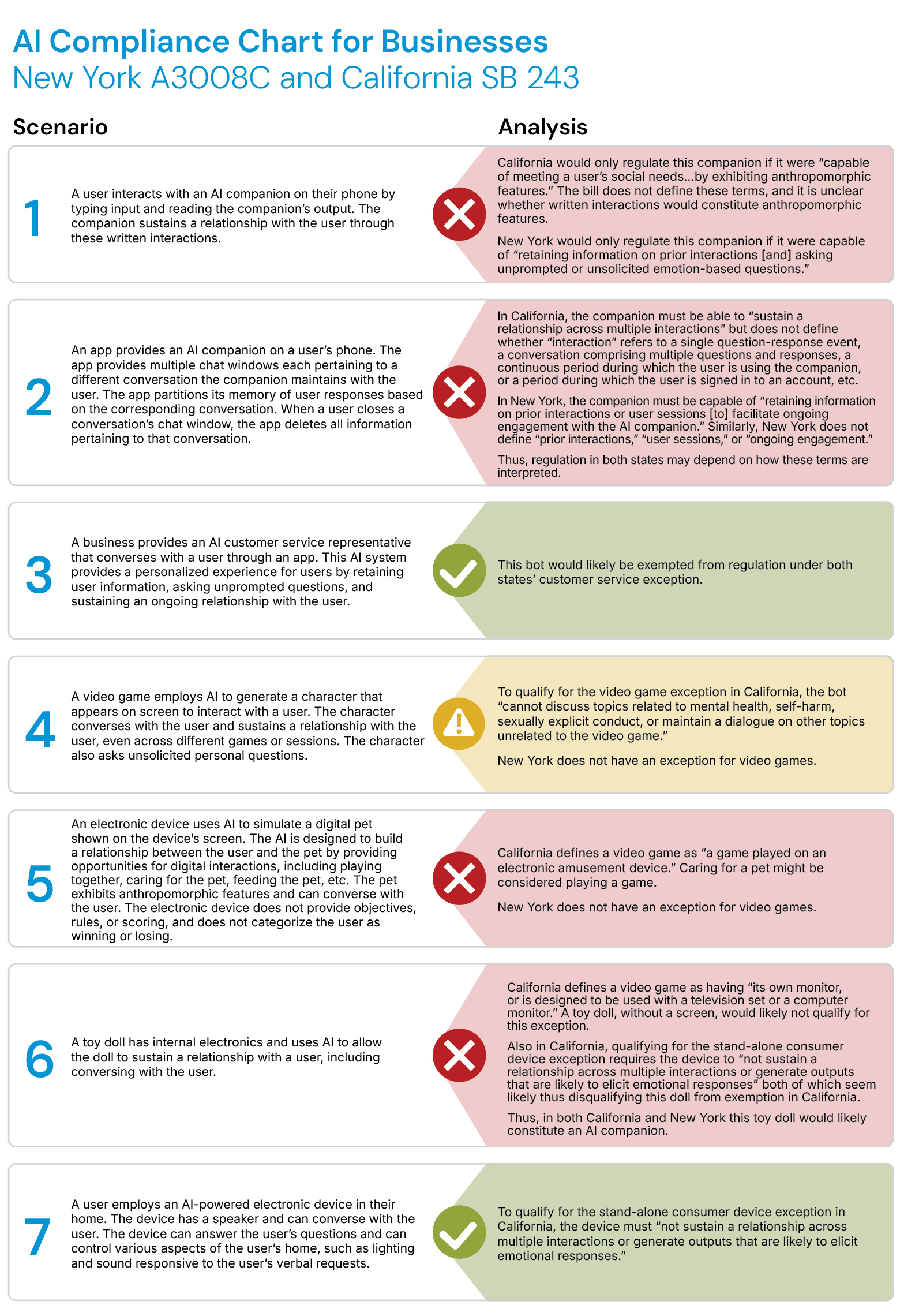

The chart below examines a set of hypotheticals to better understand the scope and application of these laws.

For AI companions that fall within the scope of these laws, both California and New York require notifying users that the AI companion is not human. New York requires these notifications at the beginning of every interaction and every three hours for continuing interactions. Both states also require businesses to implement a protocol for addressing suicidal ideation and self-harm content, including referring users to a crisis service provider (e.g., a suicide hotline). California additionally requires AI operators to publish details of the protocol on their website.
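The New York timing rule described above can be sketched in code. This is a minimal illustration under stated assumptions, not legal guidance: the function name `disclosure_due`, its parameters, and the reading of "every three hours" as "three hours since the last notice" are all hypothetical choices rather than anything taken from the statute.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumed interval, based on the "every three hours" requirement described above.
REMINDER_INTERVAL = timedelta(hours=3)


def disclosure_due(last_disclosure: Optional[datetime], now: datetime) -> bool:
    """Return True if a "not human" notice should be shown now.

    Hypothetical convention: last_disclosure is None when a new
    interaction begins, so the first notice is always due.
    """
    if last_disclosure is None:
        return True
    # Assumption: the clock restarts each time a notice is shown.
    return now - last_disclosure >= REMINDER_INTERVAL
```

A business would still need to decide what counts as the "beginning" of an interaction and when an interaction is "continuing," which this sketch deliberately leaves to the caller.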

Additional Requirements in California

California takes additional measures to protect minors. Businesses must disclose that an AI companion may not be suitable for some minors. In addition, for users who are “known to be minors,” businesses must:

- Disclose that the user is interacting with AI.

- Remind the user every three hours during continuing interactions to take a break and that the AI companion is not human.

- Prevent the AI companion from producing visual material of sexually explicit conduct or directly stating that the minor should engage in sexually explicit conduct.

Beginning July 1, 2027, businesses must also provide an annual report including the number of referrals that were issued for crisis service providers and protocols put in place relating to suicidal ideation.
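Because the annual report turns on a count of crisis referrals, businesses may want to start logging referrals well before the reporting date. The sketch below is one hedged way to do that. The class `ReferralLog`, its methods, and the choice to group counts by calendar year are assumptions for illustration, as the reporting period and format are not described here. Storing only dates, with no user identifiers, is likewise a design choice of this sketch.

```python
from collections import Counter
from datetime import date
from typing import List


class ReferralLog:
    """Hypothetical in-memory log of crisis service referrals."""

    def __init__(self) -> None:
        self._dates: List[date] = []

    def record(self, when: date) -> None:
        # Record only the date of the referral, not who received it.
        self._dates.append(when)

    def count_by_year(self) -> Counter:
        # Assumption: counts are grouped by calendar year.
        return Counter(d.year for d in self._dates)
```

In practice the log would live in durable storage and be called from wherever the crisis protocol issues a referral.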

Enforcement

In New York, the attorney general can seek up to “$15,000 per day for a violation”; however, the precise parameters of “a violation” are open to interpretation. Consider whether providing a single violative AI companion to 10 users would constitute a single violation, 10 violations, or perhaps five violations if only five of those users use the AI companion on a given day. For context, Meta CEO Mark Zuckerberg recently announced that Meta AI has over a billion monthly active users.
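To make the stakes of that ambiguity concrete, the arithmetic for the 10-user hypothetical can be worked through under each reading. The snippet below is only an illustration: the function `daily_exposure` and the scenario labels are hypothetical, and the figures are ceilings, since the attorney general can seek "up to" the stated amount.

```python
# Maximum penalty in dollars per violation per day, from the figure quoted above.
MAX_PENALTY_PER_VIOLATION_PER_DAY = 15_000


def daily_exposure(violations_per_day: int) -> int:
    """Upper bound on daily penalties for a given violation count."""
    return violations_per_day * MAX_PENALTY_PER_VIOLATION_PER_DAY


# Three possible readings of "a violation" in the 10-user hypothetical.
scenarios = {
    "one violation per violative companion": 1,
    "one violation per user with access": 10,
    "one violation per user active that day": 5,
}

for reading, count in scenarios.items():
    print(f"{reading}: up to ${daily_exposure(count):,} per day")
```

Under these assumptions the daily ceiling ranges from $15,000 to $150,000 for just 10 users, and any per-user reading scales linearly with the size of the user base.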

In California, individuals injured by a violation can seek actual damages, which creates the potential for significant liability.

Key Takeaways

Whether in New York or California, businesses should determine whether their AI systems constitute “companions” under the respective laws. This includes businesses that create or distribute AI as well as businesses that incorporate existing AI into their products or services. Those likely to be categorized as such should:

- Prepare for the required disclosures, including notifying users that the companion is not human.

- Create and implement protocols for detecting and addressing suicidal ideation and self-harm content, including generating hotline referrals.

- In California, assess what they know about whether users are minors, and prepare to implement the notifications and guardrails specific to minors as well as the annual reporting requirement.

- Ensure that disclosures do not compromise proprietary information and IP-related strategies, particularly in California, which requires publication of protocols for addressing suicidal ideation and self-harm content.

AI compliance laws vary widely by jurisdiction, underscoring the importance of closely monitoring developments in the regulatory landscape. Beyond New York and California, Utah was among the first states to implement an AI disclosure law.

Knobbe Martens can help your organization navigate the complex AI regulatory environment.